TL;DR: I facilitated a Design Sprint with AIs as the only participants. They had moments of insight (e.g., during Lightning Demos) but also tended to generalize, suggest blockchain, and crash. AIs work best as assistants for specific Sprint phases, not as full participants. And before relying on them for product work, by gods, build your own user intuition first.

🙏 This blog post is longer than I expected, and full of step-by-step Design Sprint work that you may find boring. You can jump over to a Crazy 8 example, the very final concepts, or the ending thoughts on when AI was useful and when it was just embarrassing. But if you just started sipping your coffee, I cordially invite you for a full ride… ⬇️

Human-like thinking is quite an ancient thing – some say it evolved 1.8 million years ago. Besides hunting, gathering and sharing memes, we’ve used this time to refine mind-wandering techniques to make our thoughts sharper, more creative, and more productive. And there seems to be value in teaching AI this cognitive know-how. Just saying “wait” when an AI wants to stop thinking seems to make the output more sensible. It becomes more methodical, and a bit slower – just like good old humans.

But our carbon brains are way past that silly “just wait a moment and check again”. We’ve developed structured methods for creative problem-solving, like Design Sprint.

Design Sprint was designed as rocket fuel for your product process – understand the problem, ideate, decide, prototype and test your solution in just a handful of days. Sharpies defile whiteboards and blank sheets of paper, post-it ideas are flying around, getting vetted and voted on. It’s an intense process with a precious outcome – an artefact for a real user to test out. It builds team spirit and ownership, boosts morale and gets the creative juices going.

But here’s the question: do we really need humans for this? Can AI work through a Design Sprint on its own? Could it generate valuable solutions? Or is this just a weird experiment doomed to crash and burn? Let’s assemble our silicon team and find out!

AI Assemble!

We will be using the Design Sprint 2.0 methodology, and focus only on Day 1. Sprint 2.0 condenses the process from five to four days, and by the end of this session, we should have ideas ready for evaluation.

All the team members, besides me, are going to be silicon-based.

A quick disclaimer: AI evolves fast. This experiment might be outdated before you even finish reading. Treat it as an exploration, an investigation, a way to kill a slow afternoon, just like it killed mine.

Sprint roles

To keep things from spiralling into chaos, I’ll step in as facilitator. I will guide the AI team through each phase, keeping things relatively on track.

To make things spicy, the Decider position (most power in greenlighting ideas) will be held by GPT-o1.

And then, keeping in mind the diversity of the skills and experience, let’s look at the rest of the core team (enriched with some ✨personality traits✨):

- ✍️ Copywriter, Claude (3.5 Haiku). The team’s moral compass and eloquent wordsmith, Claude crafts messages with warmth, clarity, and an almost obsessive commitment to ethical storytelling. Refuses to use clickbait headlines and despises corporate jargon. Will rewrite a sentence 17 times if it doesn’t “feel” right.

- 🛠️ Senior engineer, Grok (2.0). A rebellious, talented, boundary-pushing builder who thrives on chaos. Once rewrote an entire codebase in Rust over a weekend just because someone said “we don’t have time for that.” Contrarian by nature, not a fan of the HR department.

- 🎤 Support specialist, Gemini (2.0 Flash). A patient problem-solver who doesn’t just answer questions but spots emerging issues before they escalate, and ensures every user feels understood. Spent years perfecting the art of the apology email.

- 🧑‍🎨 Junior UI designer, DeepSeek (V3). The enthusiastic sponge of the design team, DeepSeek fearlessly experiments with new ideas, and occasionally stumbles upon a brilliant new solution to an old problem (which sometimes makes o1 jealous). Once designed an entire e-commerce checkout in Comic Sans as a joke, only to realize it actually converted better than the original.

Such a well-balanced crew. Let’s put them to work!

Setting the Stage

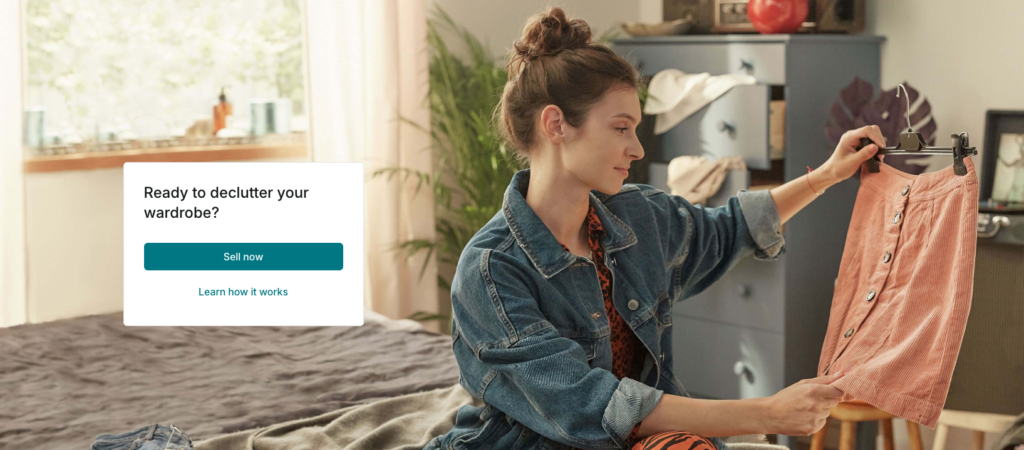

For this sprint to be useful, we need a real problem. Something that matters to both users and the business. We’ll set the scene with a completely made-up, but plausible challenge to fuel our AI hallucinations:

You work at Vinted, the company that aims to “make second-hand first choice” by providing a platform to sell and buy used items. Declutter your wardrobe, earn money, find your unique style, and shop sustainably.

The decision to run a Design Sprint was triggered by a growing number of listings (supply) without a corresponding increase in purchases (demand).

Each of the team members received a description of their position and ✨personality traits✨ to assume, and some basic “research” was shared with the Decider:

Research focused on this trend uncovered some insights:

- Buyers reported concerns about seller trustworthiness (item condition, the item not being sent at all), slow search, confusing filters, and uncertainty around return/refund policies. User flows show them dropping out of the funnel at search results and item listings.

- Focusing on clothing categories, low-priced items are generally doing fine, but there is limited growth in mid-to-high-price-range, vintage styles or premium designer items.

- There are a lot of “occasional browsers”, scrolling around, then leaving without any interaction or purchase. In comparison, “power users” use search and filters more often, and have a specific process to find favourite sellers and spot bargains matching their style more easily. Besides purchasing items, power users also interact with the community by liking, following and commenting.

Normally, we’d have user quotes, personas, fancy pie charts, interesting numbers going up and down, along with deeper research. Nevertheless, I want to see what our AI team will figure out based on fuzzy data (and I don’t have any access to Vinted user research, but I hear it’s awesome).

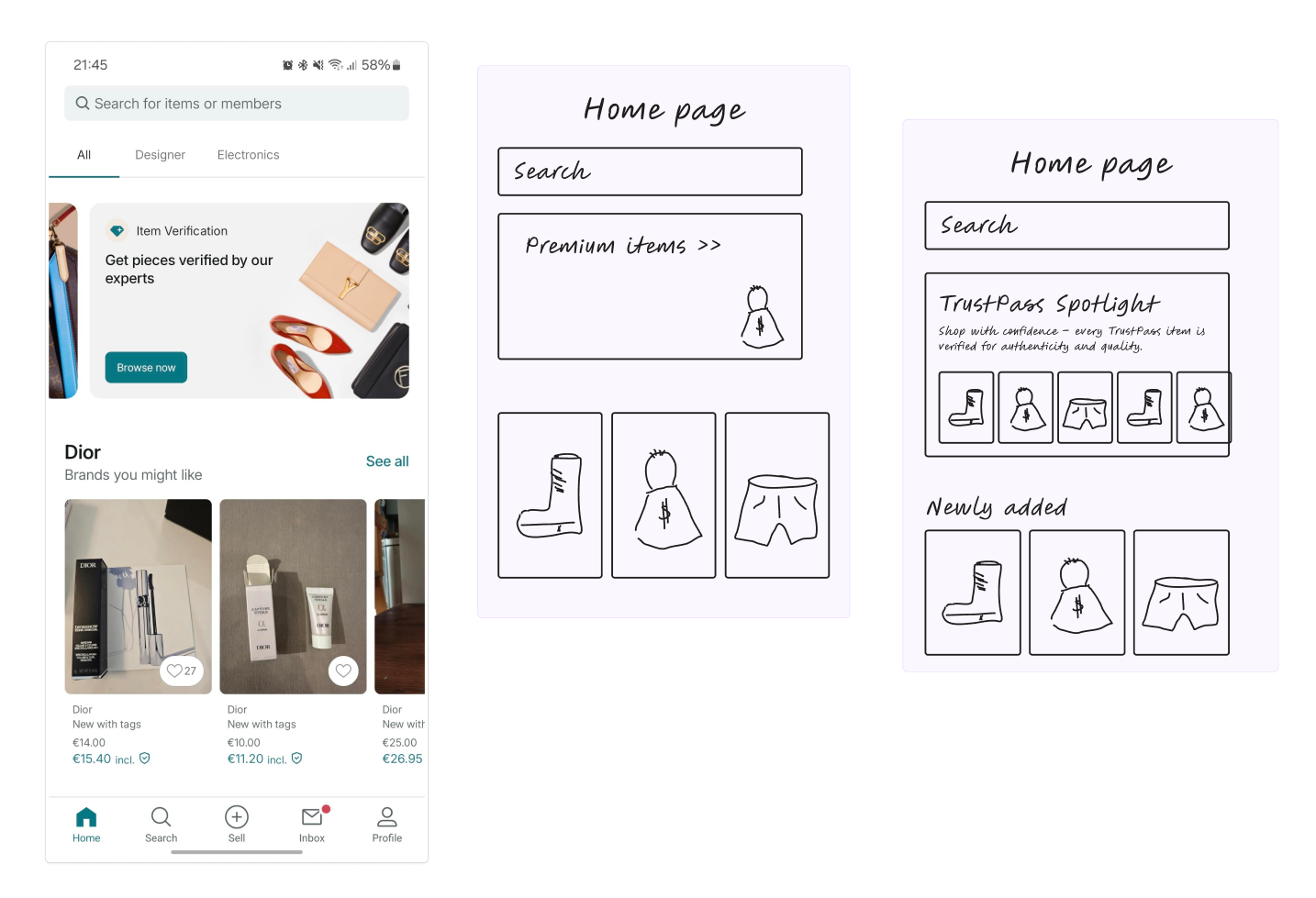

There is also a hint about the premium segment being problematic. I wanted to see if AI-generated solutions will resemble an item verification service Vinted shipped around a year ago – that seems to be addressing a similar problem.

Either way, before the marketplace imbalance sets in as seller frustration, our scrappy GPU-based heroes gathered to do something about it!

Design Sprint

It’s time to lay out some foundation and shared understanding of the problem. It’s time for the first exercise, called…

Expert Interviews: Getting the Context

The Decider, already briefed on the market situation, kicks things off. To add structure, it also got three handy, generic questions from AJ&Smart to answer:

- Describe what your product is?

- What is the problem your product is trying to solve?

- Who is using the product currently, and who would we like to use the product?

Reasoned about product market situation and problems for 7 seconds…

Alright, team—good morning everyone! Let’s kick off by grounding ourselves in the big picture of what’s going on in our marketplace…

o1 did a decent job summarizing the what and who, but it didn’t dig into the deeper why – why we think this buyer-seller imbalance exists. We can do better than this! I’ve asked the team to fire away with their questions.

Claude started nicely, focusing on communication, perceived premium value and power users:

You mentioned trust issues – what specific feedback have buyers given about seller trustworthiness? Can we look at where exactly those casual browsers drop off? I’m especially interested in whether the language or messaging at those points might be contributing to their hesitation.

Grok followed up with an amazing “Alright, so here’s what I’m thinking, right?”, then echoed Claude’s curiosity about the main reasons people are holding back. It went even further, providing way more questions and suggesting we check competitors and associated market trends, and focus on pricing transparency. Grok also wanted to know exact examples of those pain points, which is great, and the wording was sooo Grooook:

How does our current return/refund policy even work? Are there specific horror stories that we’ve received about this process?

Gemini, this little rascal, also wanted more data for each of the talking points, analytically trying to atomize the topic into smaller parts. Here’s an example for search, but it went deep into each section described by the Decider:

You mentioned search being slow and confusing. Could you give us some more concrete examples of what’s making it difficult? Is it about the filter options being unclear, too many filters, not enough, or maybe the relevance of the results they’re seeing?

DeepSeek also focused separately on each of the points, asking about patterns in user behaviour. Here’s an example digging into power vs casual users:

What specific behaviours are we seeing from casual browsers versus power users? Are there patterns in how they navigate the app, what they search for, or where they drop off? What’s stopping casual browsers from converting into buyers?

All those were passed back to the Decider, to keep the conversation going. AIs can talk with each other until the Big Chill and I have places to be, so I asked the Decider to consume all those questions, merge the mergeable, and pick the 5 most insightful and impactful ones to answer. The selection:

- What specific concerns do buyers have about seller trustworthiness, and do these concerns vary by item type or price point?

- Why is our search process perceived as slow or confusing, and how might we optimize it for both casual browsers and power users?

- What exactly about our current return and refund process confuses buyers, and where do we see the most frustration?

- How do power users get so much value out of Vinted, and why do casual browsers tend to drop off without taking any action?

- Why aren’t mid- to high-priced listings selling, and is it purely a trust issue, or are there other perception or pricing concerns?

And an example answer to the first one:

Buyers mostly worry about (a) receiving an item in worse condition than described, (b) never receiving the item at all, and (c) authenticity for higher-priced pieces (especially designer or vintage). Right now, our platform primarily shows basic trust signals like star ratings, reviews, or how many items a seller has sold. But that might not be enough for pricier or premium goods.

Exercise takeaways

- All models were quite good at asking probing questions, splitting the initial context into smaller parts to investigate. This could be very useful. Preparation for a successful sprint can be a sprint of its own – an LLM can review the prepared data, so you can anticipate and answer questions before they are asked.

- Grok UX is just abysmal. Random links in the output, page refreshing itself, answers being randomly lost. It will be a long Monday…

Let’s move on to another exercise!

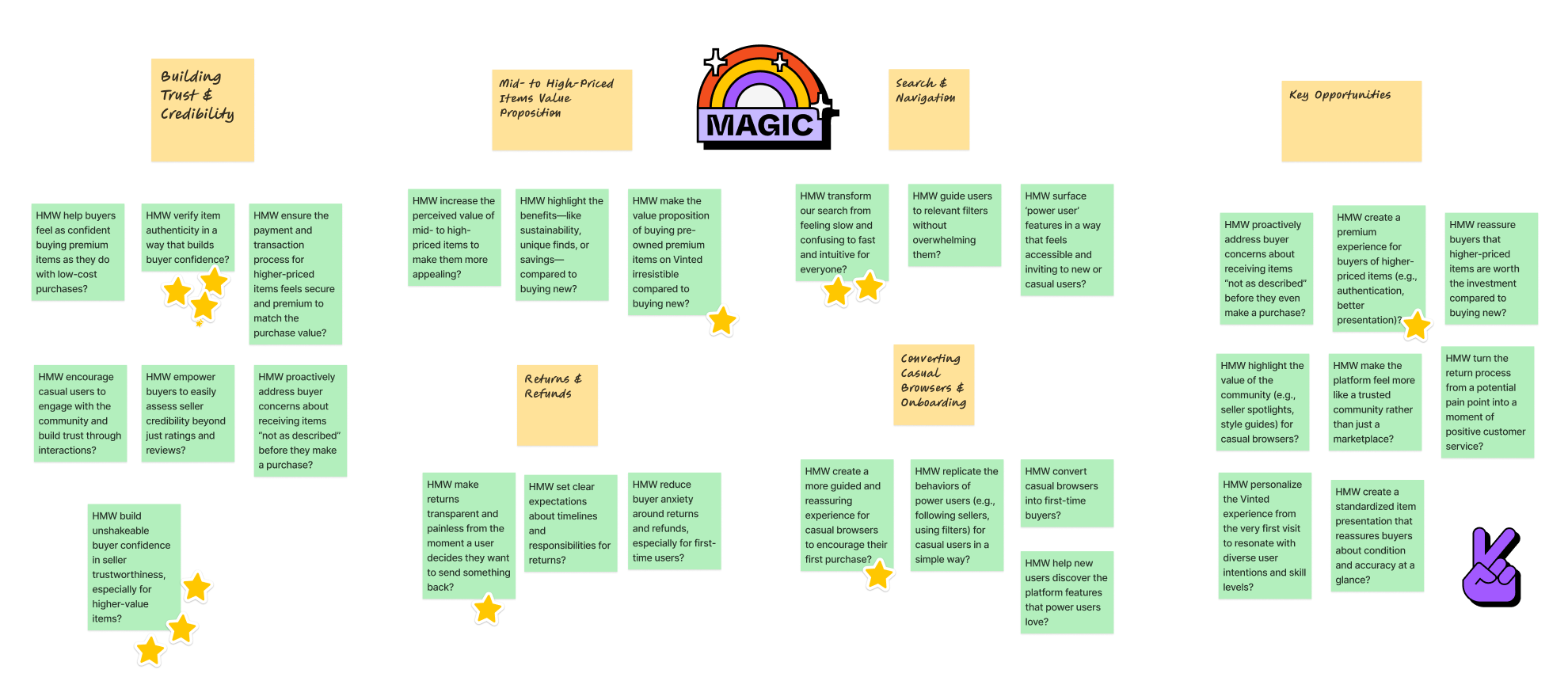

HMW: How Might We Focus?

In a human-led Design Sprint, participants take notes in the “How Might We” format while listening to expert interviews. For example, if users struggle with trust, a note might say:

HMW make purchases feel safer?

AI can do that by processing everything that was discussed so far. Our silicon squad produced a whopping 74 HMW post-its. It’s hard to imagine a whiteboard big enough, so I again asked the Decider to squish similar ones together, and create categories for what’s left.

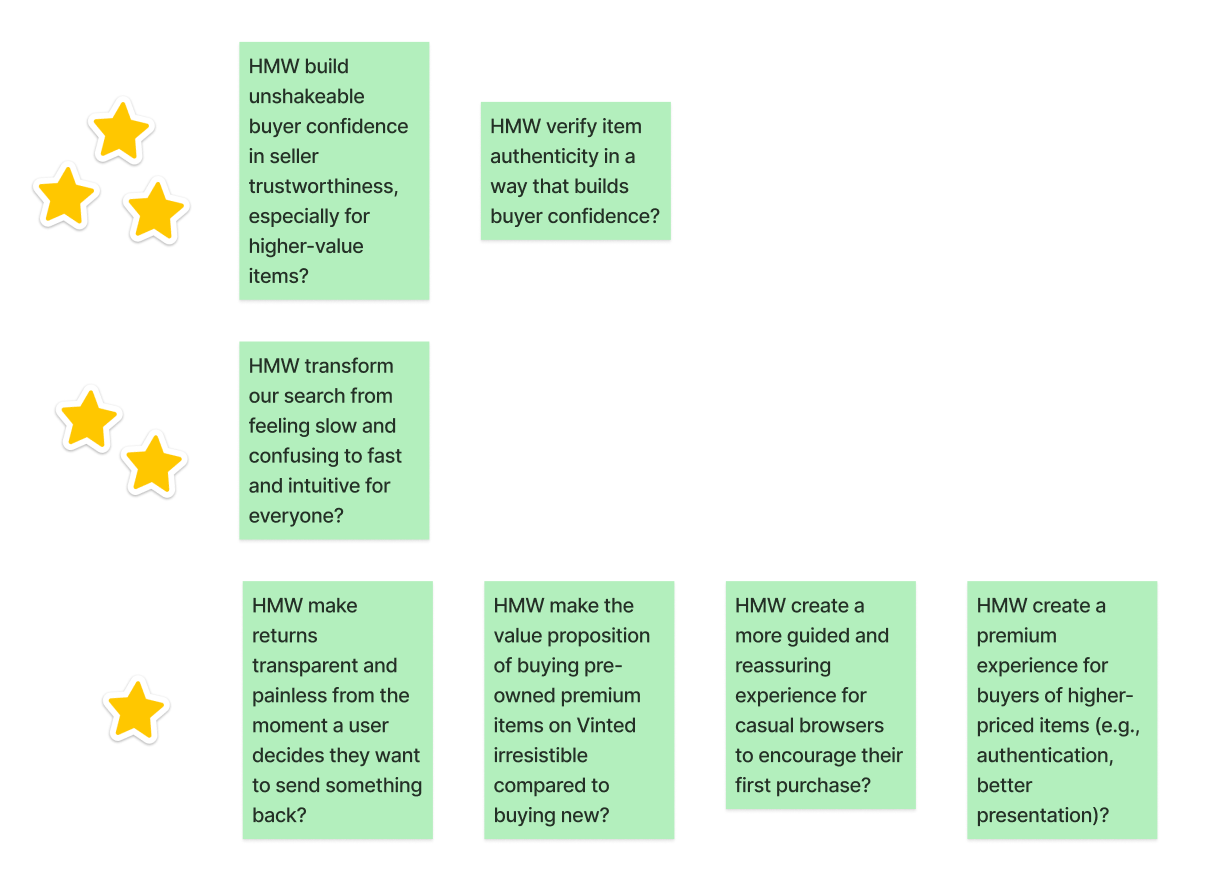

The “Key Opportunities” category seems a bit off, but the notes are there! Now let’s give the final selection to all the team members to vote! Each sprinter has 2 votes; the Decider, lucky bastard, has 4.

So this is what the AIs want to focus on during the sprint:

3 votes:

- HMW build unshakeable buyer confidence in seller trustworthiness, especially for higher-value items?

- HMW verify item authenticity in a way that builds buyer confidence?

2 votes:

- HMW transform our search from feeling slow and confusing to fast and intuitive for everyone?

1 vote:

- HMW make returns transparent and painless from the moment a user decides they want to send something back?

- HMW make the value proposition of buying pre-owned premium items on Vinted irresistible compared to buying new?

- HMW create a more guided and reassuring experience for casual browsers to encourage their first purchase?

- HMW create a premium experience for buyers of higher-priced items?
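For the curious, the voting rule described above (2 votes per sprinter, 4 for the Decider, repeat votes on the same note allowed) can be sketched in a few lines of Python. This is only an illustration: the function name, the short note labels and the ballots below are made up, and the sprint itself was tallied by hand, not with this code.

```python
from collections import Counter

# Voting budget per role, as described in the post: sprinters get 2, the Decider gets 4.
VOTES_PER_ROLE = {"sprinter": 2, "decider": 4}


def tally_votes(ballots, roles):
    """Count dot votes per note, rejecting ballots that exceed a voter's budget."""
    totals = Counter()
    for voter, notes in ballots.items():
        budget = VOTES_PER_ROLE[roles[voter]]
        if len(notes) > budget:
            raise ValueError(f"{voter} cast {len(notes)} votes, budget is {budget}")
        totals.update(notes)
    # Sort by vote count (descending), then by note label for a stable order.
    return sorted(totals.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical ballots: the labels and who voted for what are invented for illustration.
roles = {"o1": "decider", "Claude": "sprinter", "Grok": "sprinter"}
ballots = {
    "o1": ["trust", "trust", "authenticity", "search"],
    "Claude": ["authenticity", "returns"],
    "Grok": ["search", "trust"],
}
ranking = tally_votes(ballots, roles)
for note, votes in ranking:
    print(f"{votes} vote(s): {note}")
```

Letting a voter stack several votes on one note mirrors how dot voting usually works with stickers; if you prefer one vote per note per person, deduplicate each ballot before counting.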

Exercise takeaways

- Even with clear instructions (e.g., do not focus on the solution) and examples, some AI-generated HMW notes jumped straight to solutions. Could be sub-par prompting, or maybe AI just ignores instructions, just like humans do.

- o1 did a good job merging 74 HMW notes into 28, although the “Key Opportunities” category feels weird. On the other hand, this feels like AI cleaning up AI mess.

- Oh, did I mention that Grok UI is bad?

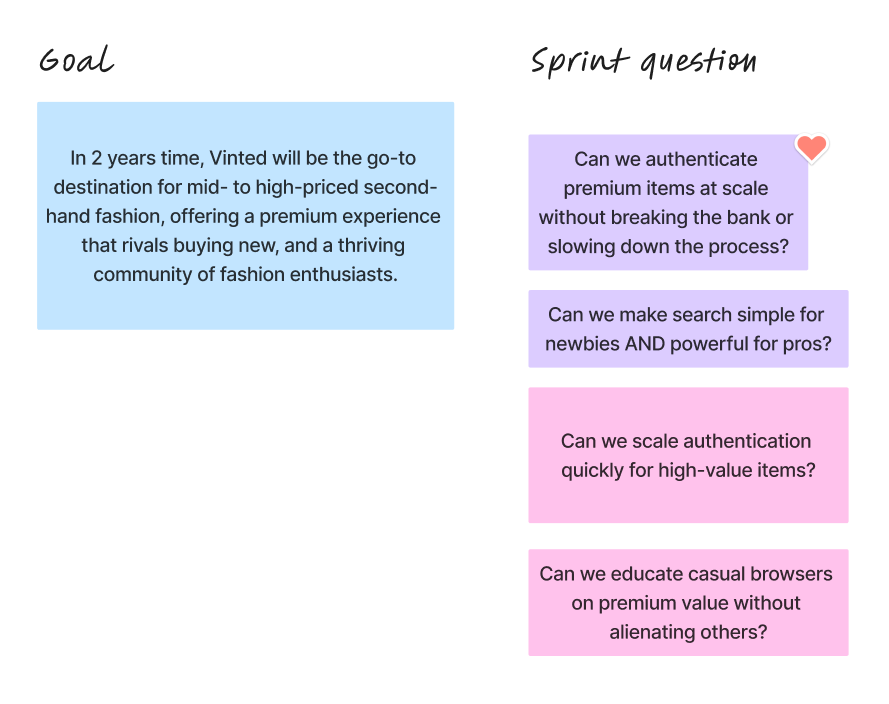

Long Term Goal: 2 Years Without a Fail

It’s time for our AI sprinters to define Vinted’s long-term goal, that is, to answer the question of what the product looks like in two years if everything goes perfectly. No roadblocks, just straight to the top. Smooth sailing all the way to victory.

Each AI team member contributed a vision or two, and then we held a vote. Before the final decision, 3 goals received a vote:

- In 2 years time, Vinted has become the most trusted destination for second-hand fashion, where buyers confidently purchase premium items as easily as they do low-cost pieces.

- In 2 years time, Vinted will have transformed casual browsers into loyal buyers, with a seamless, guided experience that makes every first purchase feel safe, easy, and rewarding, driving a 50% increase in buyer conversion rates.

- In 2 years time, Vinted will be the go-to destination for mid- to high-priced second-hand fashion, offering a premium experience that rivals buying new, with features like authentication, curated collections, and a thriving community of fashion enthusiasts.

Sadly, DeepSeek was not able to vote due to a server issue. I guess it ate something bad at lunch.

o1 weighed the options for a solid 22 seconds, before putting the final and deciding vote on the third goal. Why?

It hits our immediate challenge of boosting demand in the mid-to-high price range by focusing on trust, authenticity, and a premium user experience.

It still reflects our broader marketplace vision—creating a thriving community of buyers and sellers—while emphasizing those crucial credibility signals like authentication and curated presentations.

It lays out a clear, aspirational future-state (“rivals buying new”) that pushes us to elevate the entire buyer experience, not just for high-end items but across the platform.

Ok, so we have a goal then. Let’s mess it up a bit by poking some holes in it. We’ll do that by asking the team to think about all the things that can go wrong while we pursue this goal. What challenges are waiting for us on this vaguely paved road?

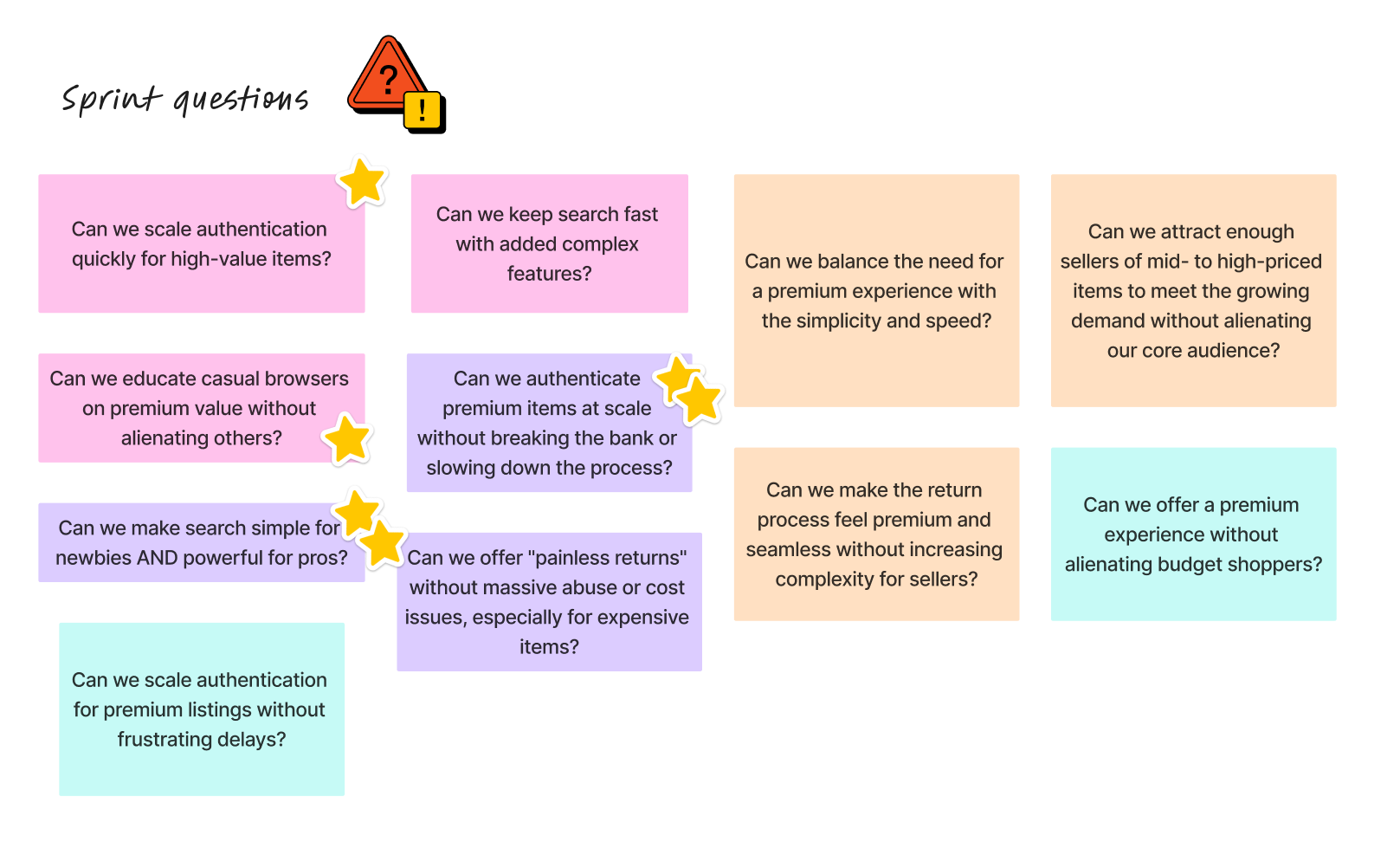

Poking the goal

Each AI team member contributed potential challenges framed as “Can we…” questions.

Sadly, while DeepSeek came back, Claude went green in the face and hastily exited the room mumbling “damn server issues, I’m sorry”. No more discounted sushi for lunch! The rest of the team proceeded with voting…

Based on the votes, we should focus on these questions:

- Can we make search simple for newbies AND powerful for pros? (2 votes)

- Can we authenticate premium items at scale without breaking the bank or slowing down the process? (2 votes)

- Can we scale authentication quickly for high-value items? (1 vote)

- Can we educate casual browsers on premium value without alienating others? (1 vote)

And the Decider? After thinking for 22 seconds, the 2nd question above was picked as the final one. This was the outcome of the exercise:

Seems like we need to authenticate premium items like there is no tomorrow.

Exercise takeaways

- AI loves to write. While prompting, I asked each AI to be precise and concise, and to limit themselves to short, single sentences. Still, they were writing a lot. A stronger suggestion to fit the answer on a post-it note saved me a lot of mental power.

- With AI servers crashing left and right, it actually felt like real humans could be more reliable than automatons. But there is the John Henry folk story, so yeah.

With the clear goal and question to answer, let’s map our user flow!

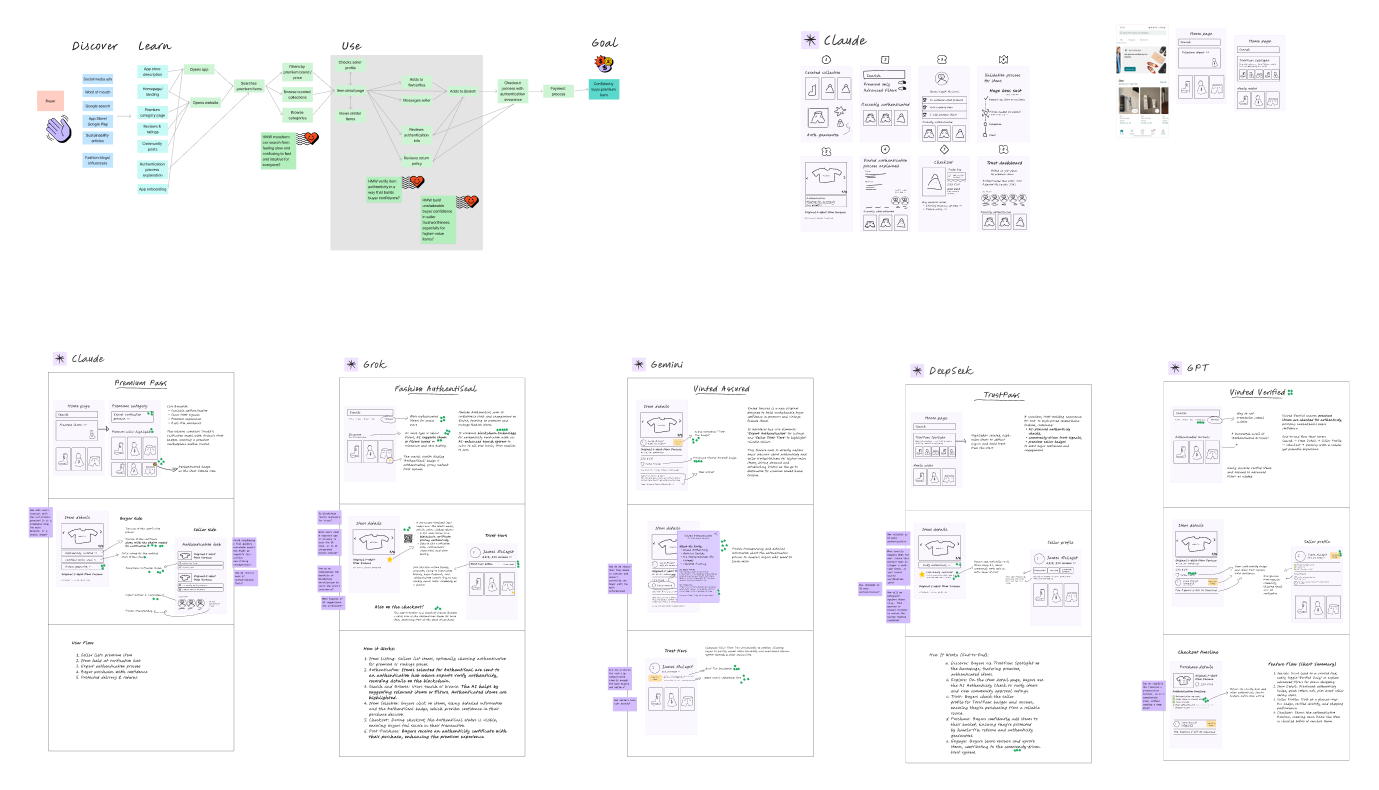

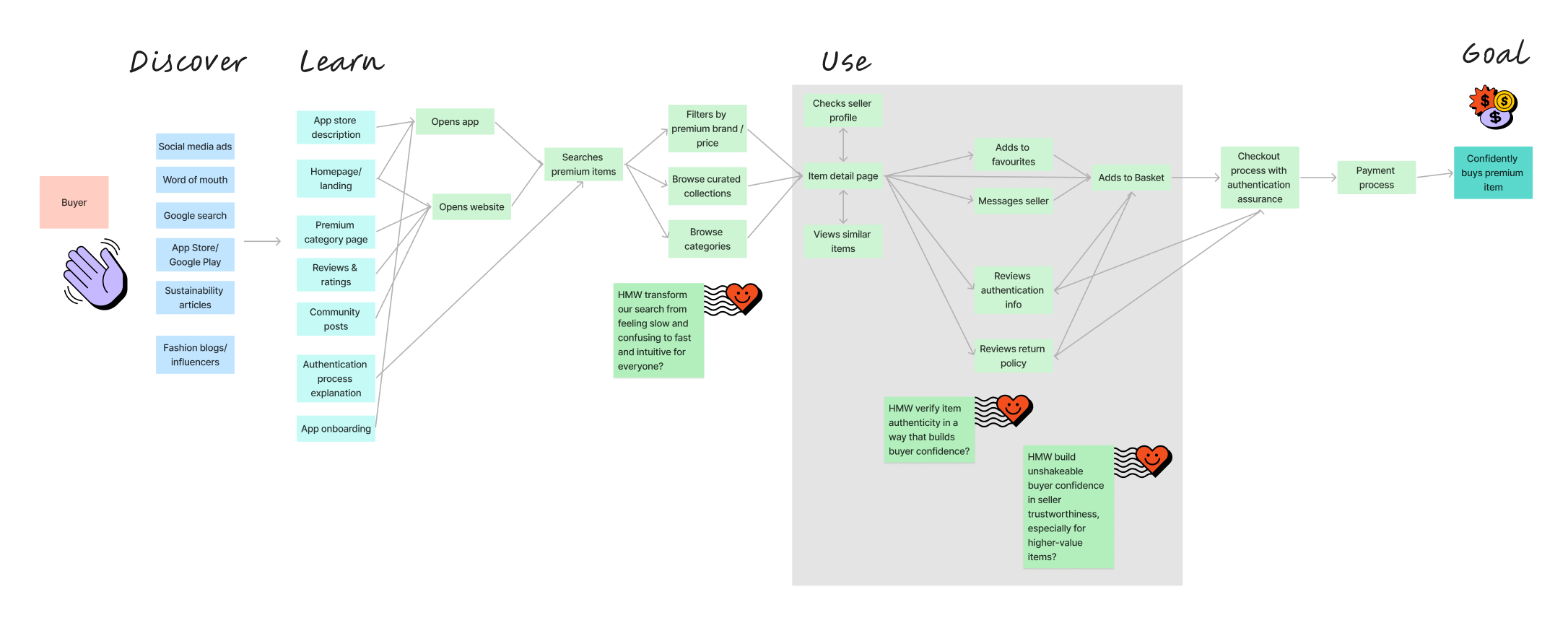

Map: Where to Attack

We’ve got our long-term goal and main challenge. Now we need to pinpoint the most impactful moment in the user journey to inject a solution.

I like Jonathan Courtney’s approach of breaking the journey into broad phases – discover, learn, use, goal. Once those are in place, the process is simple: define the user, set the goal, and connect the dots.

The buyer is the obvious user type to tackle. I’ve asked the team about the goal, and all of them responded with some variation of “Confidently buys premium item”. There was also consensus on the steps, and soon a map was ready.

Then the process asked us to place the top-voted HMW notes on the corresponding parts of the journey. The placement was easy-peasy, and soon this pastel cartography contemporary art piece was finished.

From the map, it looks like the best place to introduce a solution is after search – on the item or seller detail pages.

Exercise takeaways

- With this “discover, learn, use, goal” map, all the AIs were fine with identifying generic journey steps. But that’s a web/app flow. I’d be curious how AI would handle mapping a “non-traditional” user journey (e.g., industrial workflow, in-person service).

- We are still missing proper, insider knowledge about the user flow. I’m not sure how valid the map is. Fortunately, mapping isn’t critical to the overall Design Sprint outcome. It’s just a guide.

- AI can help double-check your already generated map. Provide the map to it and see if you missed something obvious. Nevertheless, it won’t replace deep user research or domain experience.

With the map paved, it’s time for Lightning Demos. All those energy-hungry demons are eagerly waiting to generate some hallucinations, so let’s do it!

Lightning Demos: Borrowing Smart Ideas

Great solutions tend to follow patterns, which makes them reusable across different industries. The Theory of Inventive Problem Solving (TRIZ) has some insane solution-finding cheat-sheets, and Lightning Demos tap into the same solution-seeking source.

The exercise is 25 minutes of quick research on how other companies, industries, or even nature itself solve similar problems. Write those “big ideas” on post-it notes, and share them with the team. This should inspire others when we ideate solutions.

Let’s ask each of our sprinters to research a bit and provide 2 big ideas each…

Claude:

- “StockX’s Authentication Station” – Items are held at verification hubs, authenticated by experts, then shipped to buyers with certificates. Eliminates trust issues in premium purchases.

- “Airbnb’s Progressive Reveal” – Search starts simple, reveals advanced filters as users engage more. Adapts complexity to user expertise level.

Grok:

- “Blockchain for Trust and Authenticity” – Inspired by Cryptocurrency exchanges like Coinbase

- “AI-Powered Personalized Search and Recommendations” – Inspired by: Spotify’s Discover Weekly and Netflix’s recommendation algorithm

Gemini:

- “Expert Seal of Approval” – Think of Michelin stars for restaurants, or “Certified Organic” labels for food.

- “Seller Trust Tiers” – Think of tiered loyalty programs (airlines, hotels) or ranking systems in games.

DeepSeek:

- “Crowdsourced Authenticity Verification” – inspired by Wikipedia’s community-driven model and Reddit’s upvoting system.

- “Spotify Discover Weekly” – personalized playlist for music, but can be for any content.

o1:

- Borrow from Airbnb’s “Superhost” badge for trustworthy sellers

- Take a page from Spotify’s ‘Discover Weekly’ for search personalization

I love that Grok suggested blockchain to build trust, since web3 is going so great.

Exercise takeaways

- While the AI I’m using has limited internet access, it’s already digested most of the interesting parts of the web, so you will find some cool patterns surfaced by LLMs.

- You still need to be aware of the hallucinations, but even if the “big idea” is not real, the concept behind it can be useful in figuring out your real solution.

Let’s add all those solutions to the shared understanding of the problem, prepare a nice rundown on what is the focus here, and feed that to our AI overlords. It’s time for solution sketching!

4-Step Sketch: From Doodles to Concepts

The 4-Step Sketch phase breaks this down into:

- Notes – Recap all key findings, create a report about the current state of discussion.

- Idea Sketching – Loose brainstorming, done alone, to get creative juices flowing and lube your brain.

- Crazy 8s – Eight rapid variations or iterations of ideas from the previous step.

- Solution Sketch – A detailed concept on three A4 sheets, covering key user flows and wireframes.

Team members created their notes based on all the accumulated outputs. They were instructed to focus on the most important and impactful things, ideas and decisions. During the Idea Sketching, I told them to shallowly explore some ideas, looking for a good solution.

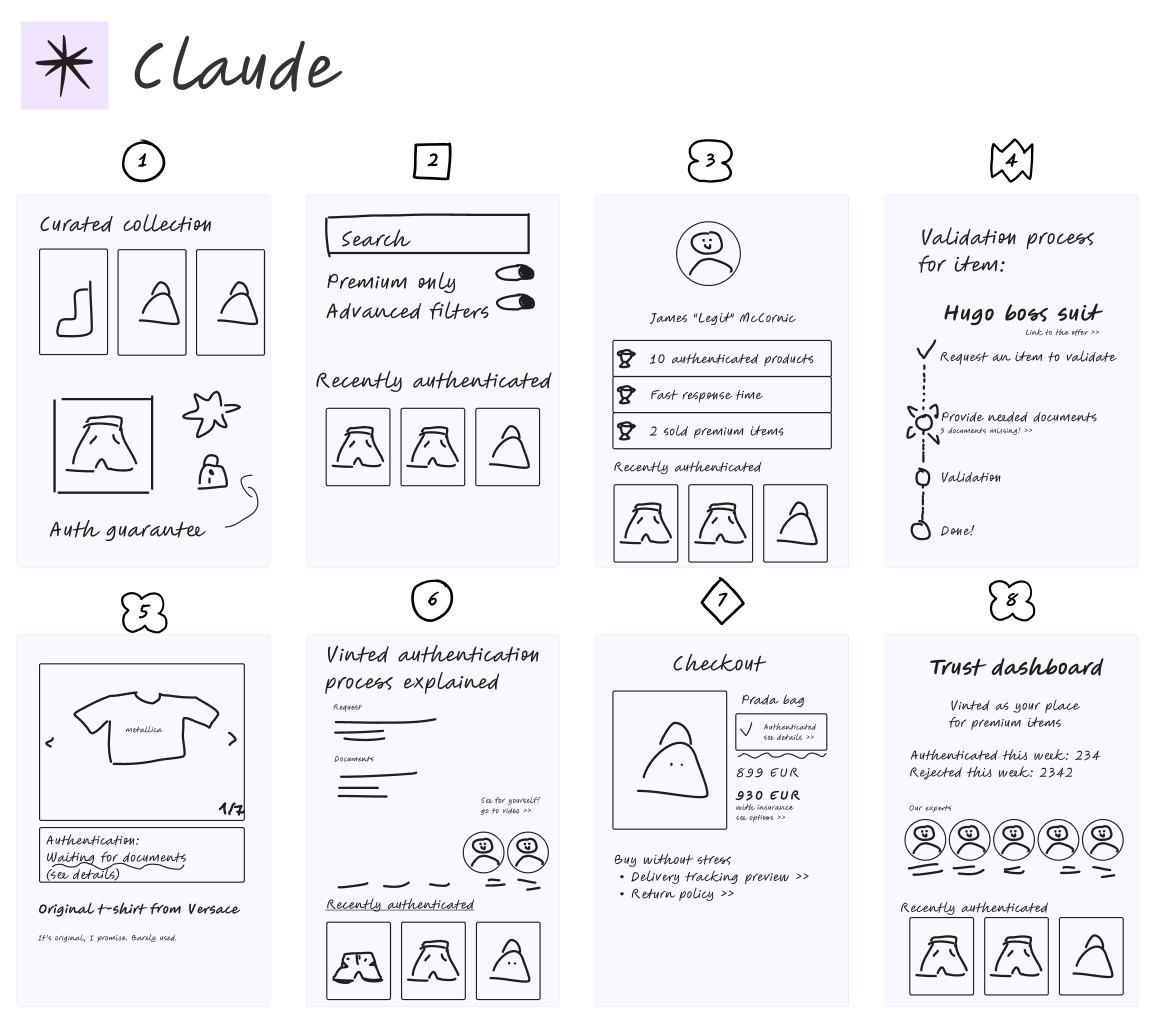

Then it was time for Crazy 8s. I’ve asked AIs to finish the task up to the best of their abilities. All team members used their words to describe the quick brain-dumps, other than Grok, who provided this:

So I asked Grok to stop messing around and also describe their ideas with words, like a normal AI.

For the lols, I’ve tried to visualize Claude’s creations:

With those crazy descriptions ready, it was time for the main dish of this day – a solution sketch.

Final Concepts: The Big 5

Those concepts are going to be voted on and tested the next day. This means that each concept must be a clean, self-explanatory, single idea, with a great project name as the cherry on top. Let’s ask our team to pick the best concepts from their Crazy 8 drafts and, keeping in mind the Sprint goal and all the discussions, provide the single best, most impactful, creative, superior and clear solution.

Below you can see all 5 final concepts visualized:

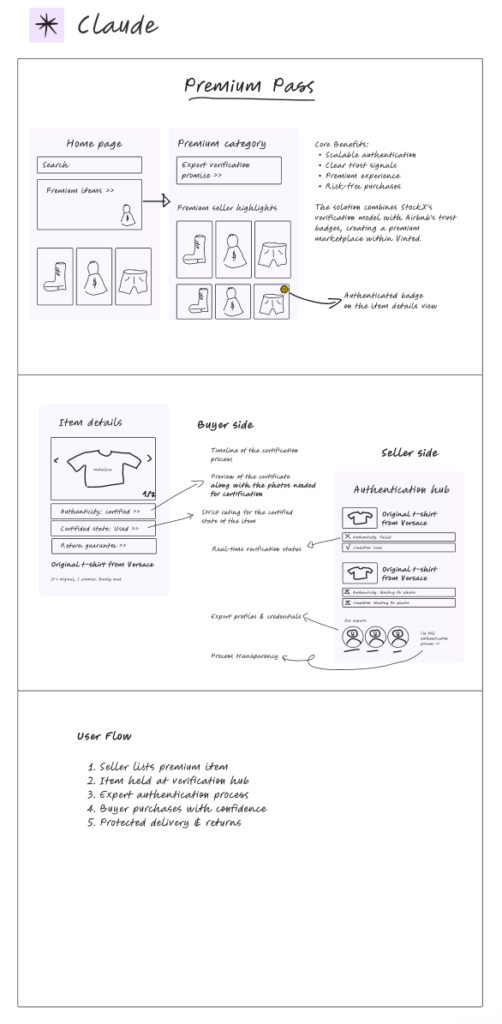

PremiumPass (Claude)

A dedicated premium shopping experience that highlights authenticated items and expert verification.

👤 For Buyers:

- New premium section: A separate entry point for premium items on the home page.

- Verification promise: Listing shows a promise button with more details about the verification process.

- Authentication badge: Authentication badges are clearly visible on items in search results.

- Detailed Verification Info: The item details page shows an authenticity certificate and high-resolution photos used in verification.

- Item Condition Ratings: Items are categorized into clear condition tiers for transparency.

📦 For Sellers:

- Expert Authentication Process: Sellers send items to a verification hub if they want the item to be verified.

- Live Verification Updates: Sellers can track the authentication progress in real-time and see expert credentials.

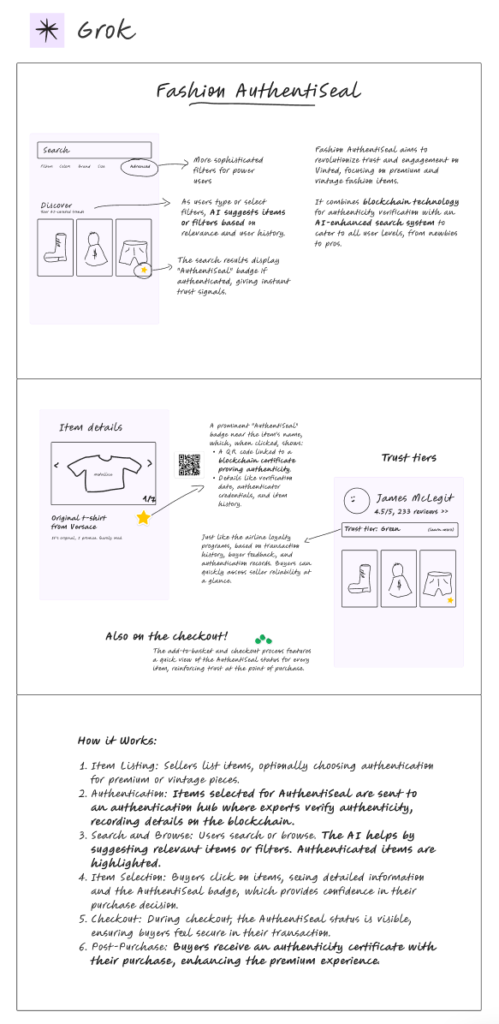

Fashion AuthentiSeal (Grok)

A blockchain-powered authentication system with AI-enhanced search and trust badges.

👤 For Buyers:

- AI-Suggested Search: Smart recommendations for items and filters when searching.

- AuthentiSeal Badge: Verified items have a prominent certification badge on the grid.

- Blockchain Proof: Tapping the badge reveals verification details (e.g., expert name, date) and a QR code linking to a blockchain-stored certificate.

- Seller Trust Tiers: Profiles display credibility levels based on history, feedback, and verification activity.

- Certificate for Buyers: After purchase, buyers receive a blockchain-backed proof of authenticity.

📦 For Sellers:

- Verification Hub: Sellers ship items to be authenticated, with results permanently recorded on the blockchain.

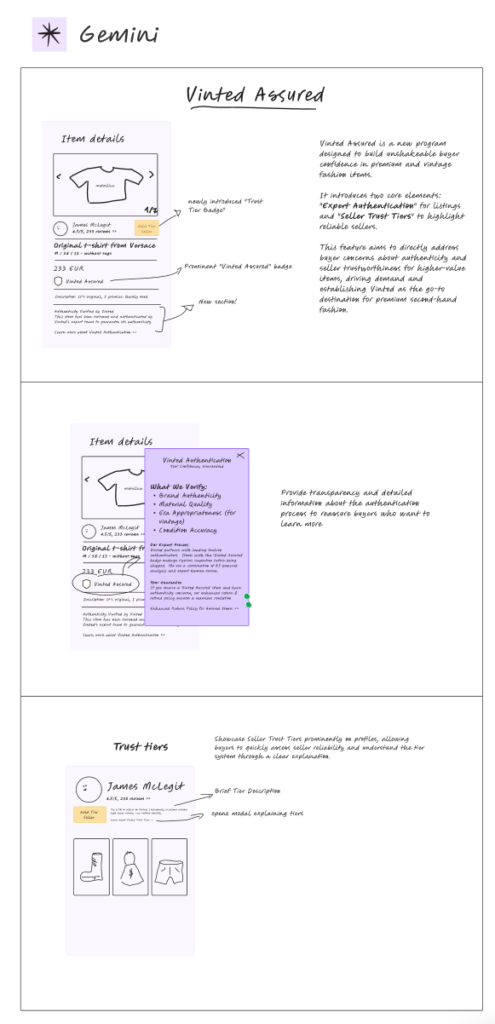

Vinted Assured (Gemini)

A trust tier system that rewards reputable sellers and offers buyers an extra layer of confidence.

👤 For Buyers:

- Trust Tiers for Sellers: Profiles and item details pages display badges (e.g., “Gold Tier Seller”) based on sales history, ratings, or identity verification.

- Clear Seller Standards: Each trust badge is accompanied by an explanation of the ranking, e.g., “Gold Tier: Top 10% of sellers on Vinted. Consistently excellent reviews, high sales volume, and verified identity.”

- Vinted Assured Badge: A label on product pages that expands into a modal showing:

  - Verification details

  - Authentication process explanation

  - Improved return policy for Vinted Assured items

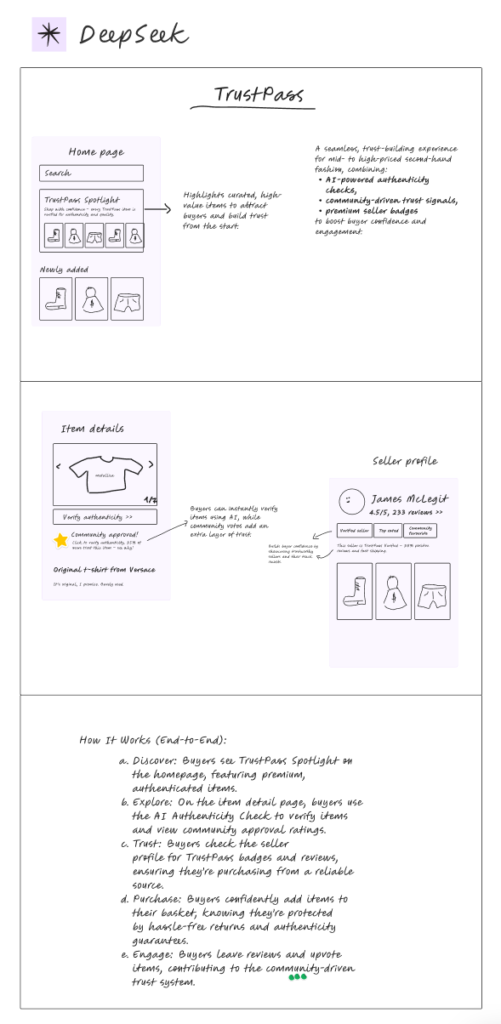

TrustPass (DeepSeek)

A community-driven authentication system powered by crowdsourced verification & AI assistance.

👤 For Buyers:

- TrustPass Spotlight: A dedicated section on the home page for verified premium items.

- Crowdsourced Authenticity Ratings: Buyers can vote on and see a trust percentage based on community verification.

- AI Verification Assistant: Buyers can use AI to analyze a listing and assess authenticity.

- Enhanced Seller Trust Labels: Sellers are tagged as “Verified Seller,” “Top-Rated,” or “Community Favorite.”

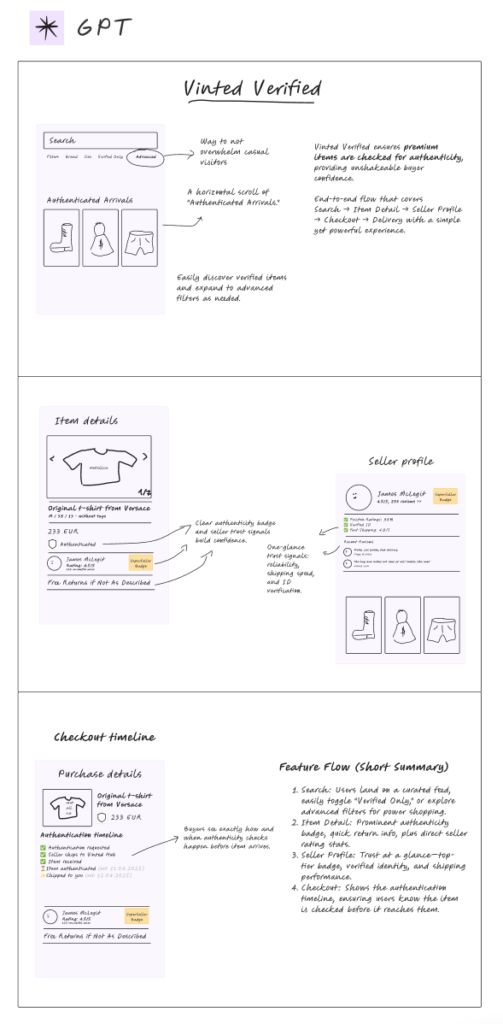

Vinted Verified (GPT-o1)

A platform-wide verification solution with search filters, authentication milestones, and trust signals.

👤 For Buyers:

- Verified-only search: A new filter allows users to see only authenticated items.

- Advanced search options: Buyers can unlock more detailed filtering settings without risking being overwhelmed.

- Authenticated Item Carousel: A home page section highlights premium-verified listings.

- Trust Signals in Seller Profiles: Expanded profile details include reliability, shipping speed, and ID verification.

- Authentication Timeline: Buyers can track authentication milestones, with estimated completion dates, e.g.:

  - “Seller ships to Vinted Hub”

  - “Item authenticated”

Kudos to Gemini and GPT – Vinted Assured and Vinted Verified sound nicely prestigious. Way more trustworthy than Fashion AuthentiSeal 🙈

Exercise takeaways

- Ideas became more structured but also… averaged out. Only Grok was going crazy with blockchain, but we are trying to solve user problems here, not close seed funding in 2018.

- Grok’s blockchain obsession aside, AI avoided completely ridiculous ideas. Solutions stayed, to some extent, plausible. The user flow for each concept was fairly clear, or could have been after a (heavy) series of questions.

- Another round (or seventeen) of AIs poking holes in each other’s concepts could flesh out those needed details (e.g., why do we show a QR code on a device screen? What does “verify with AI” even mean?). But when do you stop patching the AI outputs?

Ok, I had my fun with Figma, but we are not here to have fun. Are those concepts useful, if we were to prototype them and test them out? Let’s check what Vinted’s solution looks like, so we can compare them against something already in production.

The real solution

As mentioned at the beginning, there is already a real solution that taps into this user problem. Vinted Verification is:

… an optional paid service to check the authenticity of selected designer items. The service is paid for by buyers and is free for sellers. If an item is bought with the verification service, our team of experts (…) will check whether the item is authentic.

The buyer can decide to spend €10 to verify an item. The seller then sends the item to a verification hub first, and after the experts are done with it, it’s shipped to the buyer.

It does feel like a way more refined version of some solutions from our computerized team members. Maybe with some rounds of polishing, that’s the exact solution our Sprint could deliver. At least the entry point was somewhat similar…

But this polishing, the step that makes a solution intuitive, clear, and business-relevant, is user-centred and intuition-driven. Intuition built on user research, on interviewing humans, with blood flowing and neurons firing. Concepts ideated by AI were still full of holes: open, critical questions and undefined logic hidden under buzzwords. Would a human, brought in later to clean up and evaluate the concepts, be able to untangle the mess? Or by then, would the direction set by AI slop be too rigid to correct the course?

Still, AI can give us generalizations, summaries, even creative ideas, but can’t deliver stories we need for user-centric discovery. As Teresa Torres said:

(…) we are building for humans, we need to be engaging with other humans in our discovery efforts. Technology needs more humanity; not less.

We can already see the AI corroding knowledge work. Quoting a Microsoft study from this year:

(…) while GenAI can improve worker efficiency, it can inhibit critical engagement with work and can potentially lead to long-term overreliance on the tool and diminished skill for independent problem-solving. Higher confidence in GenAI’s ability to perform a task is related to less critical thinking effort. When using GenAI tools, the effort invested in critical thinking shifts from information gathering to information verification; from problem-solving to AI response integration; and from task execution to task stewardship.

With AI so easily available, the risk of outsourcing our intuition is high. We are happy that the output is sensible, without checking if it’s valuable.

That doesn’t mean AI can’t be useful in your work, or even during a Design Sprint. It just needs to be a tool, not the driver. It needs to help the team, not be the team. Still, it was a cool exploration, with some points noted below.

The Good: Where AI Worked

- ✅ Preparation: Our silicon coworkers were great in the preparation phase, poking holes in the presented data and asking for more details, where details were due. I can see teams preparing the presentation of the problem manually, then running the first review with AI, to see what can be added, explained better, removed or further researched. Uncover blind spots before they can cause issues.

- ✅ Lightning Demos: That step was also interestingly refreshing. LLMs were quite handy at finding similar problems in other products or domains. Some “big ideas” were useful, and I’m sure they could be more precise and creative with better prompts. Having AI come up with some creative ideas based on the current Sprint conversation can be a nice addition to the already collected demos. More juice to fuel human-based explorations.

- ✅ Crazy-8: Somehow reading about multiple, not-detailed draft solutions, and then trying to visualise them as wireframes, was a really encouraging experience. It felt like a nice mixture of market domain standards, solution drafts, and human creativity injected in the form of drawing the potential UI. You could ask AI to generate some crazy-24s, run through the list, see if there is something interesting. If nothing else, it is a nice drawing exercise.

- ✅ LLMs output similarity: For a Design Sprint kinda situation, you don’t need to use multiple models. Use the one you’re comfortable prompting. Just don’t use Grok. The UX is dreadful.

The Bad: Where AI Failed

- ❌ Lack of real user insights: There is a reason why it’s crucial to have someone with direct access to users, customers or the business during a Design Sprint. A UX researcher, a support specialist: get the voice of the user in the room. They will share focused insights and intuition to steer the conversation. Solutions must be innovative, but also useful, and based on real user experiences.

- ❌ Lack of diverse experience: I’ve tried to simulate diversity by describing AI personas, even adding quirks and more personal examples. Nevertheless, all AI responses were, style aside, quite homogeneous when it comes to domain knowledge. In a human team, diverse experiences spark diverse ideas. The AI team felt like computers talking to computers.

- ❌ Buzzwords and generalizations: Blockchain is cool and all, but is it needed here? AI is fun, but how exactly can it help solve the problem? Without intuition about our users, it’s hard to create a proper, valid use case for those solutions. Along the way, the team was adjusting their thinking, some details were added, but the ideation still felt shallow, and, pardon my French, half-assed.

Final Thoughts: Grow Your Intuition

AI is a tool, not a shortcut. It can boost efficiency, generate ideas, poke holes and fill gaps, but it should amplify your thinking and intuition, not replace it.

Do the ground work. Do your research. Talk to users. Check what’s buzzing in support cases. Grow your intuition.

In the end, the best product-related prompt engineering you can do is to deeply understand your users.

Cheers!

Footnotes:

- Vinted shipped their verification process after the training data cutoff of the assembled AIs. The AIs had no internet access, and confidently told me that it was a novel problem for them. They probably lied.